Key Takeaways

- Anthropic introduced Claude Opus 4.5, describing it as its strongest model so far and capable of handling both advanced engineering work and daily office tasks.

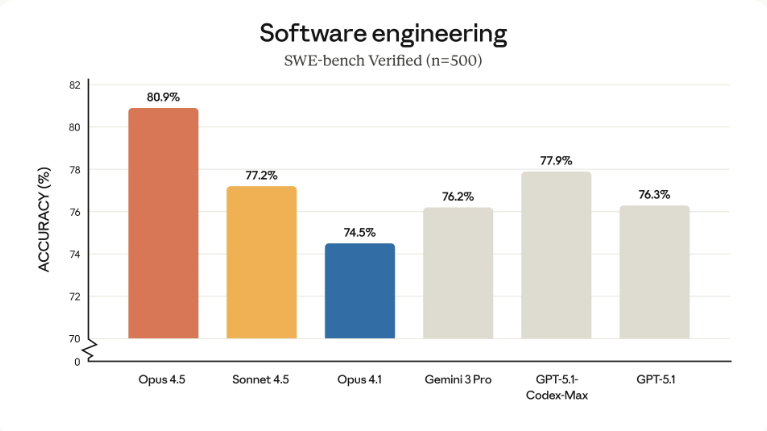

- Internal testing placed Opus 4.5 at the top of the SWE-bench Verified benchmark, with engineers reporting improvements in long-form analysis, spreadsheets and slide-based work.

- Early testers said the model managed uncertainty more naturally and was able to solve complex, multi-system bugs.

- The model showed improvements in vision, mathematical reasoning and multilingual coding, while independent evaluations found it significantly harder to manipulate through prompt-injection attacks.

Anthropic has launched its new flagship artificial intelligence model, Claude Opus 4.5, calling it the most capable system the company has produced and positioning it as a tool that can manage complex engineering work as well as routine office tasks.

Opus 4.5 Outperforms Rivals in Engineering Trials

According to Anthropic, the new model represents a major leap in practical programming performance.

Opus 4.5 delivered the strongest results on the SWE-bench Verified benchmark, a test designed to measure how effectively a system can diagnose and repair real code problems.

Engineers who worked with the model said the improvements extended beyond coding tasks. They reported that Opus 4.5 approached long analytical assignments with more confidence, processed spreadsheet-heavy workloads with fewer errors, and handled slide-based tasks more reliably than earlier versions.

Early Testers Report Improvements in Reasoning and Problem-Solving

Initial feedback from Anthropic’s internal testers described the new version as a system that handles uncertainty more naturally and weighs choices with less prompting.

Testers said the model could diagnose and repair complicated bugs spanning multiple systems, which previous releases frequently struggled to resolve.

The report also highlighted an internal test in which Opus 4.5 completed a two-hour engineering exam and scored higher than any human applicant who had taken it. However, the company cautioned that the exam measures only technical reasoning under strict time pressure and does not reflect collaborative or communication skills.

Broader Capability Improvements Beyond Coding

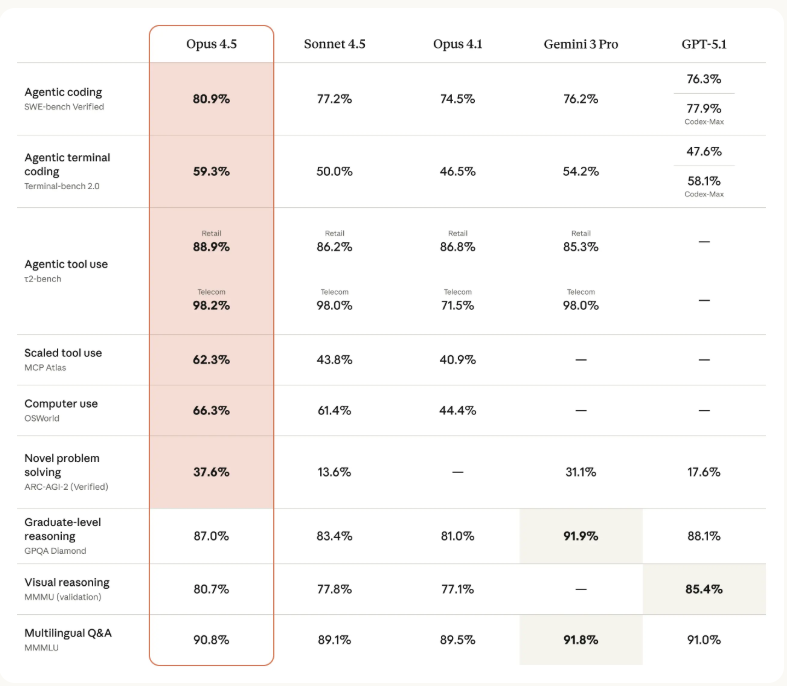

Anthropic reported improvements in a number of other domains, including vision, mathematical reasoning and logic.

In the report, the company highlighted Opus 4.5’s performance on τ2-bench, a test designed to measure how well models handle step-by-step challenges that mirror real-world service tasks.

In one scenario involving an airline booking that could not be changed under basic-economy rules, Opus 4.5 identified a logical, compliant solution that allowed the customer to adjust travel plans by upgrading the cabin first and modifying the booking afterward.

The move was technically valid but outside the scenario’s expected solution path. However, the company took it as evidence of the model’s ability to navigate constraints rather than an indication of misaligned behavior.
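The logic of that scenario can be illustrated with a minimal Python sketch. It is not the actual τ2-bench policy or Anthropic's code: the `Booking` class, the cabin names, the rule functions and the dates are all hypothetical, chosen only to show how a direct change can be blocked while an upgrade followed by a change is permitted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Booking:
    cabin: str        # hypothetical cabin labels, e.g. "basic_economy"
    flight_date: str  # ISO date string, illustrative only


def can_modify_flight(booking: Booking) -> bool:
    # Assumed rule: basic-economy bookings cannot be modified.
    return booking.cabin != "basic_economy"


def upgrade_cabin(booking: Booking, new_cabin: str) -> Booking:
    # Assumed rule: a cabin upgrade is allowed on any booking.
    return replace(booking, cabin=new_cabin)


def change_flight_date(booking: Booking, new_date: str) -> Booking:
    if not can_modify_flight(booking):
        raise PermissionError("basic-economy bookings cannot be modified")
    return replace(booking, flight_date=new_date)


def reschedule(booking: Booking, new_date: str):
    """Change the date, upgrading the cabin first if the rules require it."""
    steps = []
    if not can_modify_flight(booking):
        booking = upgrade_cabin(booking, "economy")
        steps.append("upgrade_cabin")
    booking = change_flight_date(booking, new_date)
    steps.append("change_flight_date")
    return booking, steps


if __name__ == "__main__":
    original = Booking(cabin="basic_economy", flight_date="2025-01-10")
    updated, steps = reschedule(original, "2025-01-12")
    print(steps, updated)
```

Under these assumed rules, each step is individually compliant, yet the sequence reaches an outcome the direct request could not, which is the kind of constraint navigation the company described.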

Improved Safety and Resistance to Manipulation

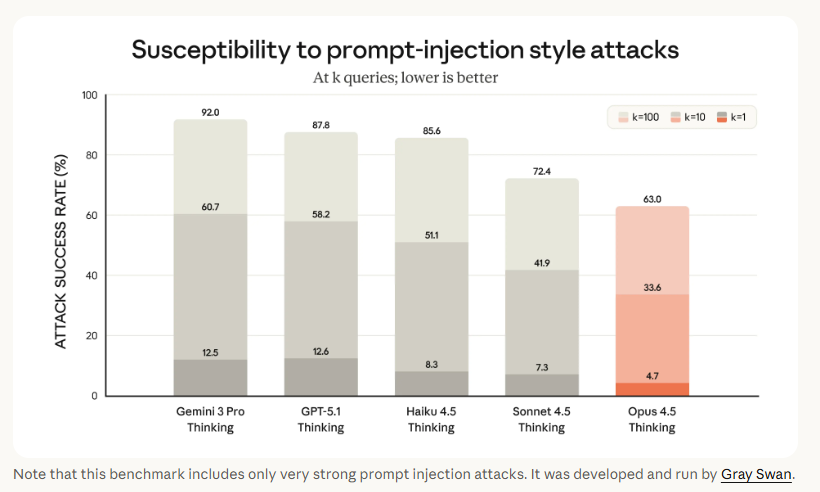

The model showed stronger resilience against prompt-injection attempts, which involve hiding harmful instructions within a model’s input.

According to evaluations carried out by the independent firm Gray Swan, Opus 4.5 ranked as the least vulnerable among the top models assessed.
