Key Takeaways

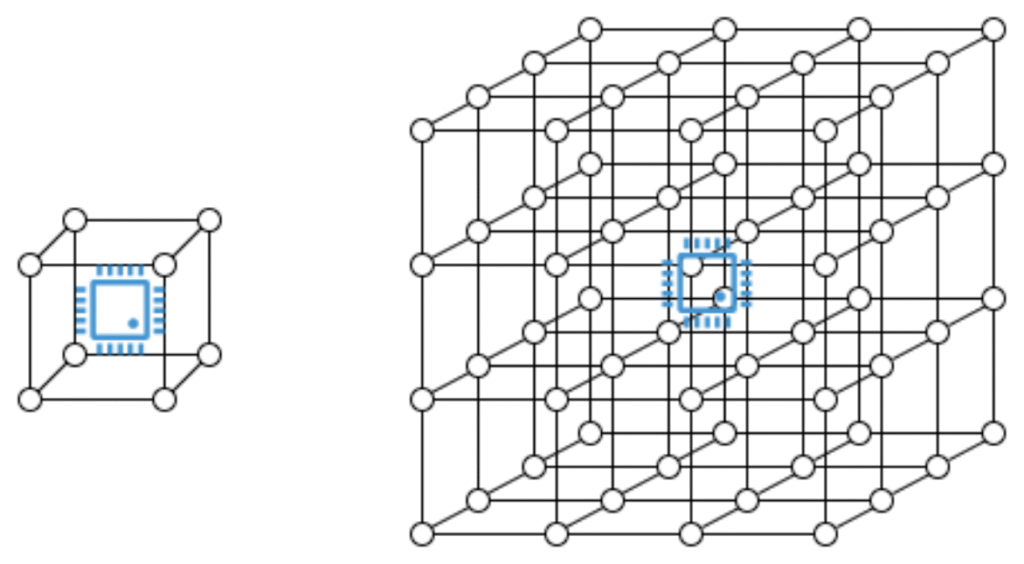

- Vitalik Buterin argues that a physical limit on memory access is slowing down blockchain and AI systems: access time scales with the cube root of memory size.

- Under this O(N^⅓) relationship, doubling memory capacity increases access latency by about 26% (2^⅓ ≈ 1.26).

- The finding bears directly on the performance of cryptographic algorithms and on hardware design across Web3 and AI.

A Paradigm Shift in Computing Efficiency

Ethereum co-founder Vitalik Buterin has published a technical analysis that challenges an assumption computer science has relied on for decades: that memory access takes constant time. He argues that access time instead scales with the cube root of memory size, so larger memory systems are inherently slower to access because of physical constraints such as the distance a signal must travel in three-dimensional space.
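As a rough illustration of the cube-root relationship, the following Python sketch computes how much slower access becomes when memory grows by a given factor. The helper name `relative_latency` is hypothetical and introduced here only for illustration, and the model ignores constant factors and the stepwise behavior of real cache hierarchies.

```python
def relative_latency(memory_growth_factor: float) -> float:
    """Latency multiplier under an assumed O(N^(1/3)) access-time model.

    Hypothetical helper for illustration only; constant factors are ignored.
    """
    if memory_growth_factor <= 0:
        raise ValueError("memory_growth_factor must be positive")
    return memory_growth_factor ** (1.0 / 3.0)


if __name__ == "__main__":
    for factor in (2, 8, 1000):
        print(f"{factor}x memory -> {relative_latency(factor):.2f}x latency")
```

Under this simplified model, doubling memory raises latency by about 26%, and eight times the memory doubles it.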

Practical Implications in Cryptography and Scaling

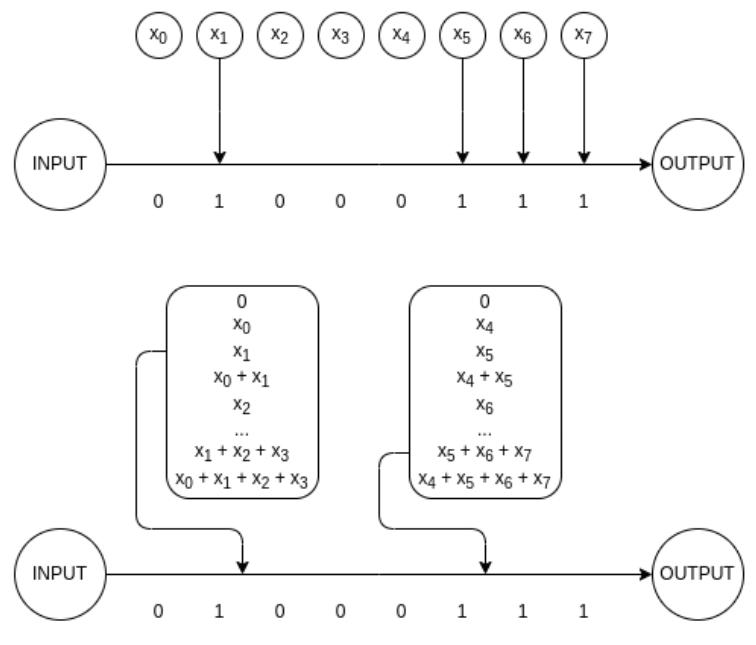

This constraint has direct consequences for performance optimization. Buterin gives examples from elliptic curve multiplication and binary field arithmetic, both of which rely on precomputed tables to speed up cryptographic operations. In his experiments, smaller tables that fit in cache memory outperformed larger ones stored in Random-Access Memory (RAM), even though the larger tables require fewer computational steps in theory. That result contradicts the standard O(1) memory access model.
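The trade-off can be sketched with a toy cost model. This is not Buterin's benchmark: it assumes a windowed lookup scheme in which a scalar of `scalar_bits` bits is processed `window_bits` at a time, each window costs one table lookup, and the table holds `2 ** window_bits` entries. All function names and the 256-bit default are assumptions made for illustration.

```python
import math


def lookups_needed(scalar_bits: int, window_bits: int) -> int:
    """Number of table lookups when the scalar is split into fixed windows."""
    return math.ceil(scalar_bits / window_bits)


def cost_constant_access(scalar_bits: int, window_bits: int) -> float:
    """Total cost if every lookup costs 1 unit (the classic O(1) model)."""
    return float(lookups_needed(scalar_bits, window_bits))


def cost_cube_root_access(scalar_bits: int, window_bits: int) -> float:
    """Total cost if a lookup costs (table entries)^(1/3) units (assumed model)."""
    table_entries = 2 ** window_bits
    return lookups_needed(scalar_bits, window_bits) * table_entries ** (1.0 / 3.0)


def best_window(cost_fn, scalar_bits: int = 256, max_window: int = 24) -> int:
    """Window size in 1..max_window with the lowest modeled cost."""
    return min(range(1, max_window + 1), key=lambda w: cost_fn(scalar_bits, w))


if __name__ == "__main__":
    print("O(1) model prefers window:", best_window(cost_constant_access))
    print("Cube-root model prefers window:", best_window(cost_cube_root_access))
```

In this toy model the O(1) assumption always favors the largest table allowed, while the cube-root assumption favors a much smaller one. Real implementations also pay for table construction and the arithmetic between lookups, so the specific window sizes here should not be read as recommendations.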

Redefining Hardware and Algorithm Design

Recognizing this constraint calls for a rethink of how computational infrastructure is designed. Buterin argues that future Application-Specific Integrated Circuits (ASICs) and Graphics Processing Units (GPUs) should prioritize localized memory architectures to reduce access latency.

For blockchain developers, this means shifting from optimizing only for raw computational power toward optimizing for memory locality, which could lead to more efficient consensus mechanisms and smart contract designs that account for the physics of memory access.

FAQs

What is the core discovery of the memory flaw slowing down blockchain and AI systems?

Vitalik Buterin argues that memory access time scales as O(N^⅓), meaning 8x more memory results in 2x slower access, which contradicts the conventional O(1) assumption.

How does this affect blockchain technology?

It forces a reconsideration of how cryptographic algorithms are implemented and how hardware is designed, for instance by emphasizing memory locality over pure capacity.

Is this theoretical or empirically verified?

Buterin supported his theory with real-world latency measurements across Central Processing Unit (CPU) caches and RAM, which aligned closely with his model.
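One common way to check how well measurements match a power-law model is to fit a straight line to the data on log-log axes and compare the slope with 1/3. The sketch below shows that approach; the function name `fit_scaling_exponent` is hypothetical, and the numbers in the demo are synthetic values generated from the model itself, not Buterin's measurements.

```python
import math


def fit_scaling_exponent(sizes, latencies):
    """Least-squares slope of log(latency) against log(size).

    A slope near 1/3 would be consistent with cube-root scaling.
    """
    if len(sizes) != len(latencies) or len(sizes) < 2:
        raise ValueError("need at least two (size, latency) pairs")
    xs = [math.log(s) for s in sizes]
    ys = [math.log(t) for t in latencies]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    variance = sum((x - mean_x) ** 2 for x in xs)
    if variance == 0:
        raise ValueError("sizes must not all be identical")
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return covariance / variance


if __name__ == "__main__":
    # Synthetic data produced by the assumed model, NOT real measurements.
    synthetic_sizes = [2 ** k for k in range(10, 31, 4)]
    synthetic_latencies = [n ** (1.0 / 3.0) for n in synthetic_sizes]
    exponent = fit_scaling_exponent(synthetic_sizes, synthetic_latencies)
    print(f"fitted exponent: {exponent:.3f}")
```

With real latency data, the fitted slope and the scatter around the line indicate how closely the hardware follows the cube-root model.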