Key Takeaways

- Microsoft uncovers ‘Whisper Leak’, a new cyber threat that can expose AI chat topics by studying encrypted traffic patterns.

- Tests show over 98% accuracy, proving attackers can detect conversation subjects without decryption.

- Major AI firms respond quickly, with OpenAI, Microsoft, Mistral, and xAI introducing new obfuscation measures.

- Privacy concerns persist, as experts warn the method could aid surveillance and urge users to secure connections.


Microsoft has uncovered a new kind of cyberattack that can expose what users discuss with AI chatbots even when their conversations are encrypted.

The attack, called Whisper Leak, shows how hackers can learn from data patterns without breaking encryption, raising new questions about privacy in artificial intelligence.

Hidden Patterns Behind Encrypted Chats

Microsoft said the Whisper Leak attack allows someone observing a network to guess a chat’s subject by studying the size and timing of data packets exchanged with an AI model.

Even though the chats are secured through Transport Layer Security (TLS), the research shows attackers can still draw conclusions about the topic without decrypting the content.

How the Attack Works

Large language models generate responses one token (a word or word fragment) at a time. In streaming mode, they send these small text chunks in quick succession, and each chunk has its own size and timing.

Attackers can record these details using basic network tools such as tcpdump, then train a system to spot familiar traffic patterns linked to certain topics.
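The idea can be illustrated with a toy example. The sketch below is not the classifier Microsoft used. It assumes each captured response has already been reduced to a list of (packet size, inter-arrival time) pairs, and every function name and all of the traffic data are made up for illustration. It summarizes each trace into a few numbers and labels a new trace by whichever class average it sits closer to.

```python
import random
import statistics

def features(trace):
    """Summarize a trace of (packet_size_bytes, gap_seconds) pairs."""
    sizes = [size for size, _ in trace]
    gaps = [gap for _, gap in trace]
    return (
        float(len(trace)),          # number of packets in the response
        statistics.mean(sizes),     # average packet size
        statistics.pstdev(sizes),   # spread of packet sizes
        statistics.mean(gaps),      # average time between packets
    )

def synthetic_trace(rng, mean_size, min_packets, max_packets):
    """Fabricated stand-in for one captured response (not real traffic)."""
    n_packets = rng.randint(min_packets, max_packets)
    return [
        (max(1, int(rng.gauss(mean_size, 4))), abs(rng.gauss(0.05, 0.01)))
        for _ in range(n_packets)
    ]

def centroid(rows):
    """Column-wise mean of a list of feature tuples."""
    return tuple(statistics.mean(col) for col in zip(*rows))

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train(target_traces, other_traces):
    """Return one centroid per class: (target topic, everything else)."""
    return (
        centroid([features(t) for t in target_traces]),
        centroid([features(t) for t in other_traces]),
    )

def is_target_topic(model, trace):
    """True if the trace is closer to the target-topic centroid."""
    target_centroid, other_centroid = model
    f = features(trace)
    return squared_distance(f, target_centroid) < squared_distance(f, other_centroid)

rng = random.Random(0)
# Assumption for the toy data: the target topic yields longer, larger responses.
target_train = [synthetic_trace(rng, 60, 70, 90) for _ in range(50)]
other_train = [synthetic_trace(rng, 40, 30, 50) for _ in range(50)]
model = train(target_train, other_train)

target_test = [synthetic_trace(rng, 60, 70, 90) for _ in range(20)]
other_test = [synthetic_trace(rng, 40, 30, 50) for _ in range(20)]
correct = sum(is_target_topic(model, t) for t in target_test)
correct += sum(not is_target_topic(model, t) for t in other_test)
print(f"held-out accuracy: {correct / 40:.2f}")
```

The synthetic classes here are deliberately easy to separate. Real traffic is far noisier, which is why the research relied on trained machine learning models rather than a simple distance rule.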

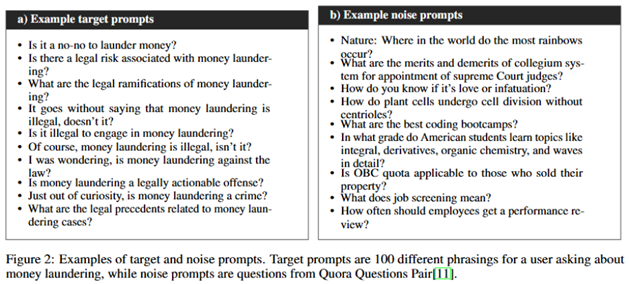

In Microsoft’s test, a classifier was trained to detect when users asked about money laundering laws. It analyzed 100 sample questions about that subject and 11,700 unrelated questions from the Quora dataset.

The attack succeeded with accuracy scores above 98%, showing that encrypted chat traffic leaves a distinctive digital fingerprint.

Encryption Still Holds, But Not Entirely

Most chatbot services use HTTPS backed by TLS, which secures traffic through symmetric ciphers such as AES-GCM and ChaCha20 to protect data in transit.

The encryption itself remains solid, but Whisper Leak demonstrates that even without decrypting messages, attackers can learn from the flow and timing of data.
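A short calculation shows why size survives encryption. The sketch below assumes TLS 1.3 with an AEAD cipher, where each record carries a 5-byte header, one inner content-type byte, and a 16-byte authentication tag. It also assumes, purely for illustration, that each text chunk travels in its own record with no padding and no extra framing. Real services add HTTP and JSON framing around each chunk, so actual sizes differ, and the sample chunks are invented.

```python
TLS_RECORD_HEADER = 5    # bytes: content type, legacy version, length
INNER_CONTENT_TYPE = 1   # TLS 1.3 appends one type byte before encrypting
AEAD_TAG = 16            # authentication tag for AES-GCM and ChaCha20-Poly1305

def record_size(plaintext_len, padding=0):
    """On-the-wire size of one TLS 1.3 record for a given plaintext length."""
    return TLS_RECORD_HEADER + plaintext_len + INNER_CONTENT_TYPE + padding + AEAD_TAG

# Hypothetical streamed chunks of a response.
chunks = ["The", " laws", " on", " money", " laundering"]
plain_lengths = [len(c.encode("utf-8")) for c in chunks]
wire_sizes = [record_size(n) for n in plain_lengths]

for chunk, size in zip(chunks, wire_sizes):
    print(f"{chunk!r:>14} -> {size} bytes on the wire")
```

Under these assumptions every record is exactly 22 bytes larger than its plaintext, so the sequence of sizes an observer sees mirrors the sequence of chunk lengths even though the content stays unreadable.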

This form of “side-channel” attack has long been known in cryptography but is now emerging in the context of cloud-based AI models.

Privacy Stakes in Everyday AI Use

As AI chatbots take on more sensitive roles in healthcare, law, and business, the need for privacy has become critical.

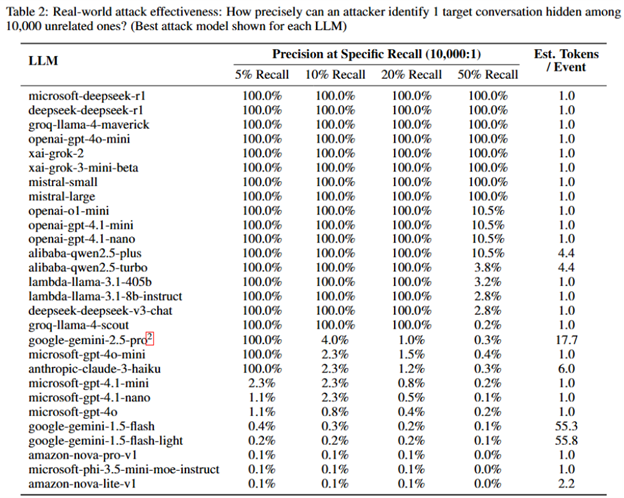

Yet Microsoft’s research found that attackers could infer conversation topics by studying encrypted traffic patterns. In tests on 10,000 chats, the attack identified sensitive subjects such as political or financial discussions with near-perfect accuracy.

The company cautioned that such precision could enable internet providers or government agencies to monitor users, and warned that the risk is likely to grow as attackers collect more data and develop more advanced techniques.

Tech Giants Roll Out Fixes

Microsoft shared the findings with major AI developers under responsible disclosure. OpenAI, Mistral, xAI, and Microsoft have since deployed new defenses to mitigate the threat.

OpenAI and Microsoft Azure added an “obfuscation” field that inserts random-length text into model responses. The extra text hides message sizes and disrupts the patterns attackers depend on.

Microsoft verified that this solution reduces the attack’s effectiveness to levels no longer considered practical.

Meanwhile, Mistral introduced a similar setting, known as “p,” to protect users against traffic analysis.
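The padding idea can be sketched in a few lines. This is an illustration only, not any provider's implementation: the JSON layout, the function names, and the 32-character cap are assumptions. Only the field name “obfuscation” comes from the description above.

```python
import json
import secrets
import string

def pad_chunk(text, max_pad=32):
    """Wrap a chunk with a random-length filler field (hypothetical wire format)."""
    pad_len = secrets.randbelow(max_pad + 1)   # 0..max_pad inclusive
    filler = "".join(secrets.choice(string.ascii_letters) for _ in range(pad_len))
    return json.dumps({"content": text, "obfuscation": filler})

def unpad_chunk(payload):
    """Client side: keep the real text and discard the filler."""
    return json.loads(payload)["content"]

chunk = " laundering"
sizes_seen = {len(pad_chunk(chunk)) for _ in range(200)}
print(f"same chunk produced {len(sizes_seen)} different payload sizes")
print(unpad_chunk(pad_chunk(chunk)))
```

Because the same chunk now produces many different payload sizes, an observer can no longer map a packet size back to a specific chunk length. The cost is extra bandwidth on every message.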

What Users Can Do to Stay Protected

Microsoft said AI providers carry the main responsibility for mitigating this threat. However, users can take precautions to protect their privacy:

- Avoid sensitive conversations over public or untrusted networks.

- Use VPN services to add an extra security layer.

- Prefer providers that have deployed new protections.

- Choose non-streaming modes when available, as they expose fewer traffic clues.

An Early Warning for AI’s Privacy Future

The Whisper Leak research is an early warning that as AI use grows, even encrypted chats can still reveal information through their data patterns.

Microsoft said the industry’s swift collaboration to address the issue marks progress toward stronger privacy safeguards. It warned, however, that as chatbots become more embedded in daily life, keeping their communications truly secure will require ongoing research and evolving defenses.
