Key Takeaways

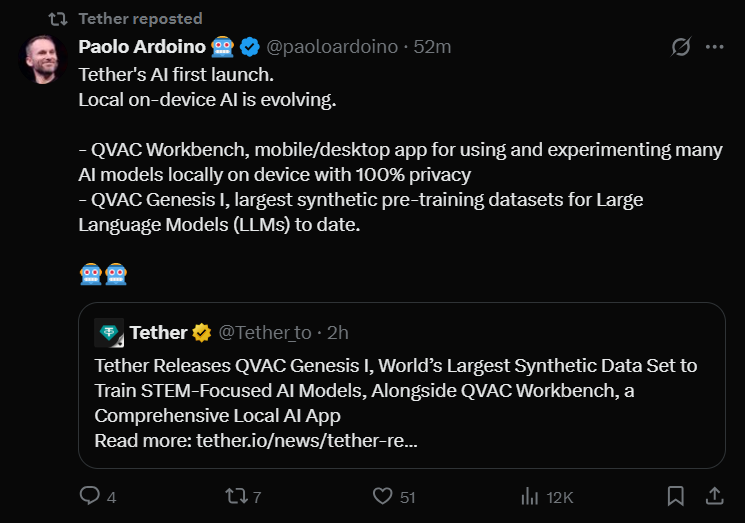

- Tether Data’s AI arm QVAC launched QVAC Genesis I, a 41-billion-token synthetic dataset — the largest of its kind — to train STEM-focused AI models.

- The dataset has been validated across key scientific benchmarks, showing strong reasoning and problem-solving performance in subjects such as math, physics, biology, and medicine, areas where open training data has been limited.

- Tether also released QVAC Workbench, a local AI platform that lets users run major AI models — including Llama, Qwen, Whisper, and others — directly on their own devices, keeping all data private and offline.

- CEO Paolo Ardoino said the initiative aims to decentralize artificial intelligence, giving researchers and individuals access to open, high-quality data and tools as an alternative to AI systems controlled by large technology firms.

Tether Data’s artificial intelligence research arm, QVAC, on Friday unveiled what it described as the world’s largest and most advanced synthetic dataset for training AI systems, alongside a new application that enables users to run large language models locally on their own devices.

The dataset, named QVAC Genesis I, comprises 41 billion text tokens, making it the largest publicly available synthetic dataset designed for science, technology, engineering and mathematics (STEM) domains. Each token represents a fragment of text used to train AI systems to understand and generate language.
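To make the idea of a token concrete, here is a minimal sketch that splits a sentence into word, number, and punctuation fragments. The function name `rough_tokenize` and the splitting rule are assumptions made for illustration. Production tokenizers generally rely on learned subword vocabularies, so real token counts for the same text will differ, and nothing here reflects how QVAC counts tokens.

```python
import re

def rough_tokenize(text: str) -> list[str]:
    """Split text into words, numbers, and single punctuation marks.

    This is a simplification: real tokenizers usually break text into
    learned subword pieces rather than whole words.
    """
    return re.findall(r"[A-Za-z]+|\d+|[^\sA-Za-z\d]", text)

sentence = "Force equals mass times acceleration: F = m * a."
tokens = rough_tokenize(sentence)

print(tokens)
print(len(tokens), "tokens")  # 12 tokens under this rough rule
```

Under this rule a single short sentence yields a dozen tokens, which gives a sense of scale for a dataset measured in tens of billions of them.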

Tether said the dataset was carefully validated across a range of educational and scientific benchmarks, showing superior performance in mathematics, physics, biology and medicine. It aims to provide an open, high-quality training foundation in areas where existing public datasets remain limited.

The company also noted that the QVAC Genesis I dataset was developed using a multi-stage generation and validation process that converts curated scientific and educational materials into structured learning data. The result, the company claims, helps models reason, solve problems and think critically rather than merely replicate text.

Commenting on the release, Paolo Ardoino, chief executive of Tether, said the initiative aims to return intelligence to the public domain, positioning QVAC Workbench and Genesis I as tools that allow AI to live, learn, and evolve locally on users’ own devices.

Alongside the dataset, the company released QVAC Workbench, a local AI platform that allows users to run a wide range of models directly on their own devices, keeping data private and under user control.

All interactions within the Workbench environment remain on-device, Tether said, ensuring that user data is not uploaded or shared. Additionally, a feature called “Delegated Inference” allows users to link mobile and desktop devices in a peer-to-peer connection to harness additional computing power.

“Most AI today sounds smart, but doesn’t truly think,” Ardoino said. “We designed this dataset to help models understand cause and effect, to make connections, draw conclusions, and reason their way through complexity. And we’re making it open to everyone.”

The launch comes amid a growing debate over the centralization of AI capabilities within a handful of major technology firms. Tether said its goal is to support “community-driven intelligence” and enable independent researchers to develop models capable of competing with proprietary systems.

Together, the two releases mark the first stage of QVAC’s broader mission to promote a “local intelligence” paradigm, where AI tools operate directly on personal devices instead of centralized cloud infrastructure.

What Is Synthetic Data?

Synthetic data refers to information that is algorithmically generated using models or simulations instead of being gathered directly from real-world sources.

Synthetic data can be used to replicate real data’s statistical properties, such as its patterns, distributions, and correlations, without copying the data itself. This makes it especially valuable when genuine datasets are limited, private, or costly to obtain, such as in medical or academic research.

Leading platforms note that synthetic data allows developers to train AI systems safely and efficiently, adding that it can either augment existing datasets or replace portions of them entirely.

When generated properly, it mirrors the behavior of real data while offering complete control over its composition, structure, and intended purpose.
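The idea of matching statistical properties without copying records can be sketched in a few lines. The example below fits a mean and standard deviation to a small sample and then draws new values from a Gaussian with those parameters. The measurements are made-up numbers, the helper names are hypothetical, and the single-variable Gaussian is an assumption chosen for simplicity. It does not capture correlations between variables, and it is not how QVAC Genesis I was produced.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and standard deviation of a sample."""
    return statistics.mean(values), statistics.stdev(values)

def generate_synthetic(values, n, seed=0):
    """Draw n new values from a Gaussian fitted to the real sample."""
    mu, sigma = fit_gaussian(values)
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical "real" measurements (made-up numbers for illustration).
real = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]
synthetic = generate_synthetic(real, n=1000)

print("real mean/stdev:     ", fit_gaussian(real))
print("synthetic mean/stdev:", fit_gaussian(synthetic))
```

The synthetic values share the sample's center and spread, yet none of them is taken from the original list, which is the property that makes the approach attractive when the real records are scarce or sensitive.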

What Is a “STEM-Focused Synthetic Dataset”?

A STEM-focused synthetic dataset is designed specifically to train artificial intelligence systems in the domains of Science, Technology, Engineering, and Mathematics (STEM).

In the case of Tether Data’s QVAC Genesis I, the dataset is designed for STEM applications and is generated artificially rather than collected from the real world.

It helps AI systems not only process language but also reason through complex scientific and technical problems.

How Is a STEM-Focused Synthetic Dataset Built and Validated?

Creating such a dataset typically involves multiple stages.

- Generation: Algorithms produce billions of “tokens” — the fragments of text that AI models use to learn language — based on scientific and educational templates. These tokens represent ideas, formulae, relationships, and conceptual patterns across STEM subjects.

- Validation and Benchmarking: The dataset is then tested against academic and scientific benchmarks to confirm its ability to support reasoning and problem-solving rather than rote repetition. In QVAC’s case, Tether reports validation across mathematics, physics, biology, and medicine.

- Public Release: Making the dataset openly available allows global researchers to access data that would otherwise remain locked in corporate systems.

- Usage: AI models trained on these materials can demonstrate stronger domain-specific reasoning — understanding cause and effect, making logical connections, and thinking through scientific complexity — instead of merely mimicking natural language.
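The generation and validation stages above can be illustrated with a toy pipeline. This is a sketch under stated assumptions, not Tether's actual process: the question template, the field names, and the `validate` rule are all hypothetical. It fills a physics template with random values, then keeps only the examples whose stored answer matches a recomputed one.

```python
import random

def generate_examples(n, seed=0):
    """Generation: fill a question template with random values."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        mass = rng.randint(1, 20)    # kilograms
        accel = rng.randint(1, 10)   # metres per second squared
        examples.append({
            "question": (f"A {mass} kg object accelerates at {accel} m/s^2. "
                         "What net force acts on it?"),
            "mass": mass,
            "accel": accel,
            "answer": mass * accel,  # newtons, from F = m * a
        })
    return examples

def validate(example):
    """Validation: recompute the answer from the stored inputs."""
    expected = example["mass"] * example["accel"]
    return example["answer"] == expected

dataset = generate_examples(5)
# Add one deliberately wrong example to show the filter at work.
dataset.append({
    "question": "A 2 kg object accelerates at 3 m/s^2. What net force acts on it?",
    "mass": 2,
    "accel": 3,
    "answer": 7,
})

clean = [ex for ex in dataset if validate(ex)]
print(f"kept {len(clean)} of {len(dataset)} examples")  # kept 5 of 6 examples
```

A real pipeline would check far more than arithmetic, but the shape is the same: generate at scale, then discard anything that fails verification before it reaches a model.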

The Benefits and Risks of Synthetic Data

A well-designed synthetic dataset offers notable advantages but also comes with important risks.

On the positive side, synthetic data broadens accessibility, giving researchers, educators, and smaller organizations the ability to work with large-scale, high-quality STEM data without depending on proprietary corporate sources.

Since it can be tailored to scientific reasoning, it helps AI models strengthen analytical and mathematical skills rather than merely imitate language.

It can also enhance privacy and compliance: when the data is generated without personal information, it avoids many of the regulatory and ethical hurdles tied to real-world datasets.

Moreover, synthetic data can be structured deliberately to reduce bias, ensuring fairer representation of topics that may be underrepresented in natural data.

Yet the approach carries risks.

Quality control remains critical: poorly generated or unverified synthetic data can embed errors that distort model learning.

Experts also warn of model collapse, where systems trained primarily on synthetic material — particularly if it recycles outputs from previous AI models — can lose diversity and degrade in performance.
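A toy simulation gives some intuition for the loss of diversity. In the sketch below, each "generation" is built only by resampling the previous one, which stands in for training on a prior model's outputs. This is a deliberately simplified assumption rather than a model of any real training run, and the function name and corpus of numbered "ideas" are hypothetical.

```python
import random

def next_generation(corpus, rng):
    """Build a new corpus by sampling, with replacement, from the old one.

    Nothing new can appear, and items that happen not to be drawn are
    lost for every later generation.
    """
    return [rng.choice(corpus) for _ in corpus]

rng = random.Random(0)
corpus = list(range(1000))  # generation 0: 1000 distinct "ideas"
distinct_per_generation = [len(set(corpus))]

for _ in range(10):
    corpus = next_generation(corpus, rng)
    distinct_per_generation.append(len(set(corpus)))

print(distinct_per_generation)
```

The count of distinct items can never rise from one generation to the next, because every new corpus is drawn entirely from the old one. Mixing in fresh, verified material at each step is one way to counter that narrowing.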

Additionally, models may face a simulation-to-reality gap, struggling to adapt when exposed to real-world information.

Excessive reliance on synthetic data can also lead to a loss of grounding, stripping away the nuance and unpredictability that come from authentic human-generated text.

In Summary

In essence, Tether’s push into synthetic data and local AI marks a clear step beyond its stablecoin origins, placing the company at the crossroads of digital tokens, open research and decentralized technology.

With the release of QVAC Genesis I and the Workbench platform, Tether reflects a broader shift in AI development away from centralized control and toward tools that give users greater autonomy.

Whether this approach can match the reach of Big Tech remains uncertain, but it highlights the rising demand for AI that is private, collaborative, and user-owned.
